Of all the scary powers of the internet, its ability to trick the unsuspecting might be the most frightening. Clickbait, photoshopped pictures and false news are some of the worst offenders, but recent years have also seen the rise of a new, potentially dangerous tool known as deepfake artificial intelligence (AI).

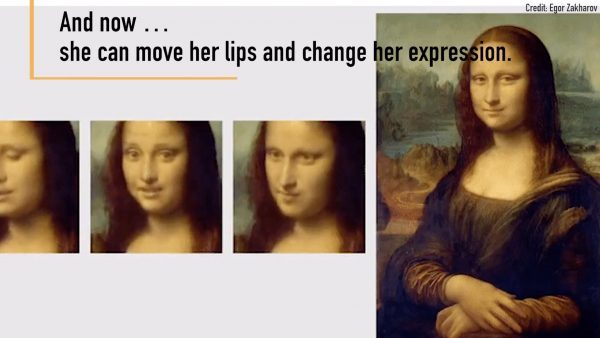

The term deepfake refers to counterfeit, computer-generated video and audio that is hard to distinguish from genuine, unaltered content. It is to film what Photoshop is to images.

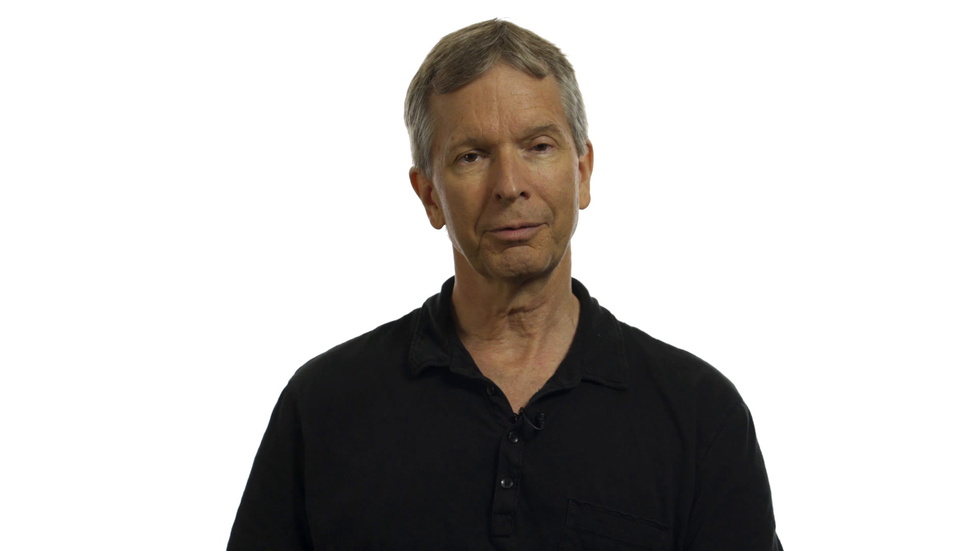

The tool relies on what are known as generative adversarial networks (GANs), a technique invented in 2014 by Ian Goodfellow, then a Ph.D. student, who now works at Apple, Popular Mechanics reported.

The GAN algorithm involves two separate AIs: one that generates content (say, photos of people) and an adversary that tries to guess whether the images are real or fake, according to Vox. The generating AI starts off with almost no idea how people look, meaning its partner can easily distinguish true photos from false ones. But over time, each AI gets progressively better, and eventually the generating AI begins producing content that looks perfectly lifelike.
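
For readers curious what that back-and-forth looks like in code, here is a minimal sketch in Python. It is a toy under stated assumptions, not the method behind any real deepfake tool: the "real content" is just numbers clustered around 4, the generator has a single knob (`shift`), and the adversary is a one-line classifier. Every name in it (`train`, `generator_step`, `discriminator_step` and so on) is made up for illustration.

```python
import math
import random

REAL_MEAN = 4.0  # assumption: "real" data are numbers clustered around 4


def sigmoid(t):
    # Numerically stable logistic function; maps any score to a probability.
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def real_batch(rng, n):
    # Stand-in for genuine content (photos, in the article's example).
    return [rng.gauss(REAL_MEAN, 0.5) for _ in range(n)]


def generate(gen, rng, n):
    # The generator turns random noise into fake samples.
    return [gen["shift"] + rng.gauss(0.0, 0.5) for _ in range(n)]


def discriminator_step(disc, real, fake, lr):
    # The adversary learns to score real samples high and fake samples low
    # (one gradient step on the usual cross-entropy loss).
    gw = gc = 0.0
    for x in real:
        p = sigmoid(disc["w"] * x + disc["c"])
        gw += -(1.0 - p) * x
        gc += -(1.0 - p)
    for x in fake:
        p = sigmoid(disc["w"] * x + disc["c"])
        gw += p * x
        gc += p
    n = len(real) + len(fake)
    disc["w"] -= lr * gw / n
    disc["c"] -= lr * gc / n


def generator_step(gen, disc, fake, lr):
    # The generator nudges its output so the adversary rates it as more real
    # (one gradient step on -log D(fake)).
    g = 0.0
    for x in fake:
        p = sigmoid(disc["w"] * x + disc["c"])
        g += -(1.0 - p) * disc["w"]
    gen["shift"] -= lr * g / len(fake)


def train(steps=200, batch=32, lr=0.05, seed=0):
    rng = random.Random(seed)
    gen = {"shift": 0.0}          # starts with no idea what real data look like
    disc = {"w": 0.0, "c": 0.0}   # starts unable to tell real from fake
    for _ in range(steps):
        # The two AIs take turns improving against each other.
        discriminator_step(disc, real_batch(rng, batch), generate(gen, rng, batch), lr)
        generator_step(gen, disc, generate(gen, rng, batch), lr)
    return gen, disc


if __name__ == "__main__":
    gen, disc = train()
    print("generator shift after training:", round(gen["shift"], 2))
```

Early in training the adversary quickly learns that larger numbers look "real", and the generator responds by shifting its output upward toward the real data. Bare-bones training like this is known to be unstable and can overshoot or oscillate rather than settle, so treat the sketch as a picture of the two-player loop rather than a recipe.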

This article was originally posted on Queer SF